Fox News and other television and online outlets long ago proved the appeal of confirmation bias, the idea that audiences like to hear what they already believe. But a new study in the journal Science shows that the same holds true on social media, where more and more people are finding their news and where increasing algorithm-driven personalization might, in theory, threaten to close people off from ideas that compete with their own.

Researchers at Facebook and the University of Michigan looked at how three different factors affect the ideological diversity of the stories users see and click on in their Facebook feeds. “The media that individuals consume on Facebook depends not only on what their friends share, but also on how the News Feed ranking algorithm sorts these articles, and what individuals choose to read,” their study explains.

To see how those factors played out, the researchers examined the activity of 10.1 million anonymized American Facebook users across 7 million different web links shared on the social network over a recent six-month period. Those links were classified as either “hard,” meaning national and international news and politics, or “soft,” meaning sports, entertainment and travel. (Only about 13 percent of the links shared fell into the “hard” category, which might indicate something right there about the future of the news industry and/or the country.)

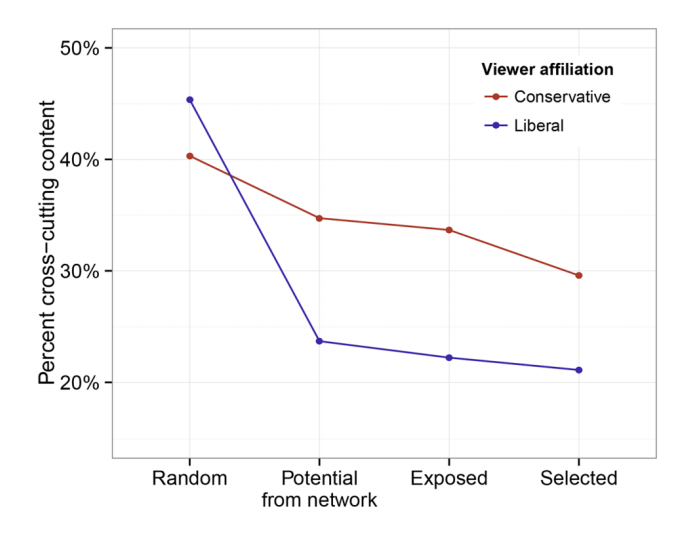

The first of those factors — what friends share — works to narrow the range of ideas Facebook users on both sides of the aisle come across, with the effect greater on the left, according to the study: “Despite the slightly higher volume of conservatively aligned articles shared, liberals tend to be connected to fewer friends who share information from the other side, compared to their conservative counterparts: 24 percent of the hard content shared by liberals’ friends are cross-cutting, compared to 35 percent for conservatives.” On top of that, the most frequently shared links were clearly aligned with liberal or conservative thinking, meaning less partisan stories didn’t get spread around as much.

Facebook’s algorithmic News Feed, which aims to show people content that they will like, also plays some part in reinforcing those ideological barriers. The study finds that, purely as a result of how stories are ranked and filtered, conservatives see about 5 percent less content from the other side of the spectrum than what their friends share, while liberals see about 8 percent less.

Still, the researchers conclude that users themselves play a greater role in creating their own news echo chambers: Individual choices about what to click on reduced exposure to ideas from the other side of the political spectrum by 17 percent for conservatives and 6 percent for liberals.

In other words, despite the proliferation of news and opinion sources — and despite the fact that Facebook friendships can and do cut across ideological lines more than 20 percent of the time, on average — people tended to close themselves off by not clicking on stories that clashed with their own thinking.

In an analysis accompanying the Facebook study, David Lazer, a professor of political science and computer science at Northeastern University, notes that changes to Facebook’s algorithmic curation — and to Facebook users’ behavior — could turn a small effect today into a large one tomorrow. “Ironically,” he writes, “these findings suggest that if Facebook incorporated ideology into the features that the algorithms pay attention to, it would improve engagement with content by removing dissonant ideological content.”

That and other political implications of Facebook’s social algorithms bear monitoring, Lazer writes, but the study makes clear that if Americans are going to break out of their echo chambers, they’ll have to change their own behavior. Until then, we have plenty of pictures of cute kittens and precocious babies to share.
